AI Lessons in Higher Education

These AI lessons introduce students to the opportunities, risks, and ethical considerations of using generative AI tools in higher education, helping them understand how to apply AI responsibly in academic contexts. These lessons combine reading, writing, discussion, and critical evaluation tasks that support students in reflecting on AI’s role in learning and the importance of academic integrity. By integrating AI into structured academic activities, learners develop both practical digital literacy and deeper critical thinking skills needed for university study and future professional environments (Wilson, 2026).

AI Lesson Download

Introduction to AI

An Introduction to AI in the Classroom [new 2025]

This lesson introduces students to the key opportunities, risks, and ethical considerations of using generative AI in higher education. Through reading, writing, and discussion tasks, students learn how to apply AI responsibly, evaluate its limitations, and understand institutional policies. EXAMPLE Level ***** [B1/B2/C1] WEBPAGE TEACHER MEMBERSHIP / INSTITUTIONAL MEMBERSHIP

AI Student Checklist

AI Student Checklist [new 2025]

This Student AI Checklist helps learners use generative AI safely, ethically, and within university guidelines. It is important because it promotes academic integrity, protects personal data, and ensures students remain responsible for their own understanding and original work. Level ***** [B1/B2/C1] INFORMATION WEBPAGE TEACHER MEMBERSHIP / INSTITUTIONAL MEMBERSHIP

AI in Higher Education Lecture

A short lecture on the opportunities, risks and assessment challenges of AI in Higher Education

AI Lecture Listening Test

AI Lecture Listening Test Worksheet [new 2025]

This listening lecture lesson examines how generative AI is reshaping higher education by exploring its opportunities, risks, and implications for assessment design. Students take notes using the PPT slide and then answer a range of questions: open, matching, multiple-choice, and critical thinking. EXAMPLE Level ***** [B2/C1] VIDEO [14.30] / MP3 / PPT link in download / TEACHER MEMBERSHIP / INSTITUTIONAL MEMBERSHIP

Lesson Downloads

How to use A.I responsibly in academic writing

A short lecture on using AI as a support tool in writing rather than a creator or writer.

AI-Supported Academic Writing 10-Lesson Workbook

FREE SAMPLE LESSON 1: Sentence Accuracy – Identifying Errors [new 2026]

Lesson 1 focuses on developing sentence-level grammatical accuracy, particularly subject–verb agreement and singular/plural nouns, using short academic sentences on digital learning as practice. It introduces controlled use of AI to help students check and reflect on their own corrections, laying the foundation for the remaining lessons in the ten-lesson sequence. Level ***** [B1/B2/C1] TEACHER MEMBERSHIP / INSTITUTIONAL MEMBERSHIP

AI-supported Academic Writing 10-Lesson Workbook [new 2026]

The AI-Supported Writing Workbook helps international students develop clear, accurate academic writing for university study. Lessons progress from sentence-level accuracy to paragraph-level writing, using a neutral academic theme focused on education in the digital age. AI tools are used in a controlled way to support checking, reflection and critical evaluation rather than content generation. LESSON EXAMPLE. Level ***** [B1/B2/C1] TEACHER MEMBERSHIP / INSTITUTIONAL MEMBERSHIP

How to use A.I responsibly in academic reading

A short lecture on using AI as a support tool in reading rather than just asking it for answers.

AI-Supported Academic Reading 10-Lesson Workbook

FREE READING LESSON 4: Recognising writer’s stance and hedging language [new 2026]

Lesson 4 focuses on recognising a writer’s stance in academic texts, including whether a position is positive, cautious, or critical. Students identify common hedging language and learn to distinguish between strong claims and cautious academic claims using short digital-learning texts, supporting more critical reading in later lessons. Level ***** [B1/B2/C1] TEACHER MEMBERSHIP / INSTITUTIONAL MEMBERSHIP

AI-supported Academic Reading 10-Lesson Workbook [new 2026]

The AI-supported academic reading workbook helps international students develop core academic reading skills for university study. Lessons move from sentence-level understanding to paragraph and text analysis, using neutral academic themes linked to digital education. Students practise identifying main ideas, supporting details, reference, cohesion, and stance. AI tools are used in a controlled way to support checking and reflection rather than replace reading, supporting accurate comprehension, critical reading, and academic judgement. LESSON EXAMPLE Level ***** [B1/B2/C1] TEACHER MEMBERSHIP / INSTITUTIONAL MEMBERSHIP

More AI Lesson Downloads

TED TALK: AI in the Real World

How AI will step off the screen and into the real world – Daniela Rus (2024)

This inspirational TED talk discusses how the convergence of AI and robots will unlock a wonderful world of new possibilities in everyday life. The listening test includes multiple choice, short answer questions, sentence completion, table completion and summary completion. Example. Level: ***** [B1/B2/C1] / Video [12:54] TEACHER MEMBERSHIP / INSTITUTIONAL MEMBERSHIP

TED TALK: Losing Control of A.I

Losing control of Artificial Intelligence (AI)? Sam Harris

TED Talk: An informative talk about the worries surrounding A.I and how we fail to address the seriousness of what A.I could become. Listening worksheets use a range of test-type questions (T/F/NG, open questions, information gap fills, table completion, summaries, etc.). Example of test. Level: ***** [C1] / Video [14:27] TEACHER MEMBERSHIP / INSTITUTIONAL MEMBERSHIP

TED TALK: A.I Save Humanity

How can A.I save humanity? – Kai-Fu Lee

TED TALK: This lecture discusses how China has embraced A.I technology and is accelerating its advancement. It focuses on the main challenges that we all face with an A.I future and how it will positively complement our lives. The lesson includes teacher’s notes, comprehension questions, critical thinking questions and an answer key. Example. Level: ***** [B2/C1] / Video [14:42] TEACHER MEMBERSHIP / INSTITUTIONAL MEMBERSHIP

AI Research Article

Research Article: Generative AI In Higher Education: Opportunities, Risks and Assessment Design By C. Wilson (2025)

RESEARCH: This overview was created by analysing current guidance and evidence from the UK Government alongside policies and practice papers from leading UK universities: Glasgow, Leeds, Reading, Sussex, Manchester, Edinburgh, King’s College London, Leicester, Liverpool and Newcastle. Synthesising these sources enabled an in-depth, UK-focused analysis of generative AI in higher education, covering opportunities, risks, academic integrity, assessment design, and student use.

- 1. Gen AI

- 2. Applications

- 3. Limitations

- 4. Assessment

- 5. Pen & Paper Tests

- 6. Traffic Light System

- 7. Dos & Don'ts

- 8. Language Courses

- 9. Conclusion

- 10. Reference List

1. What is Generative AI?

Generative artificial intelligence (Gen AI) in education refers to tools such as ChatGPT, Google Gemini, Microsoft Copilot, DeepSeek, Grammarly and Midjourney that create new content including text, images, code, and simulations to enhance and personalise teaching and learning. These technologies can support automated feedback, lesson design, adaptive tutoring, and the creation of realistic scenarios. However, they also carry risks such as spreading misinformation, raising ethical concerns, and weakening critical engagement when used without careful evaluation (1-5).

AI: umbrella term for all forms of artificial intelligence.

Generative AI: a specific type of AI designed to produce new data, not just process or recognise it.

2. Applications of Generative AI in Education

AI tools can enhance students’ learning by offering personalised support, creative ideas, and instant feedback. The following examples show how AI can be used to develop understanding, improve productivity, and make studying more interactive and inclusive (1,4,5).

- Personalised learning: Use AI tools to adapt study pace, review key topics, and focus on areas students find difficult.

- Content exploration: Generate study notes, quizzes, summaries, and multimedia explanations to support understanding.

- Virtual tutoring: Ask AI for one-to-one explanations, examples, or feedback when revising or preparing assignments.

- Simulations and scenarios: Practise real-world situations or case studies created by AI to apply theory to practice.

- Assessment and feedback: Use AI for self-checking, receiving formative feedback, or tracking your learning progress.

- Accessibility: Get complex texts simplified, translated, or converted to accessible formats such as alt text or audio.

- Text manipulation: Summarise readings, paraphrase key points, or reformat notes into tables or outlines.

- Idea generation: Brainstorm essay topics, argument structures, or report outlines to plan your writing.

- Data analysis and visualisation: Turn data into clear charts, graphs, or infographics for presentations or reports.

- Productivity support: Organise tasks, plan study schedules, and manage time more efficiently with AI assistance.

3. Limitations and Risks of Generative AI in Education

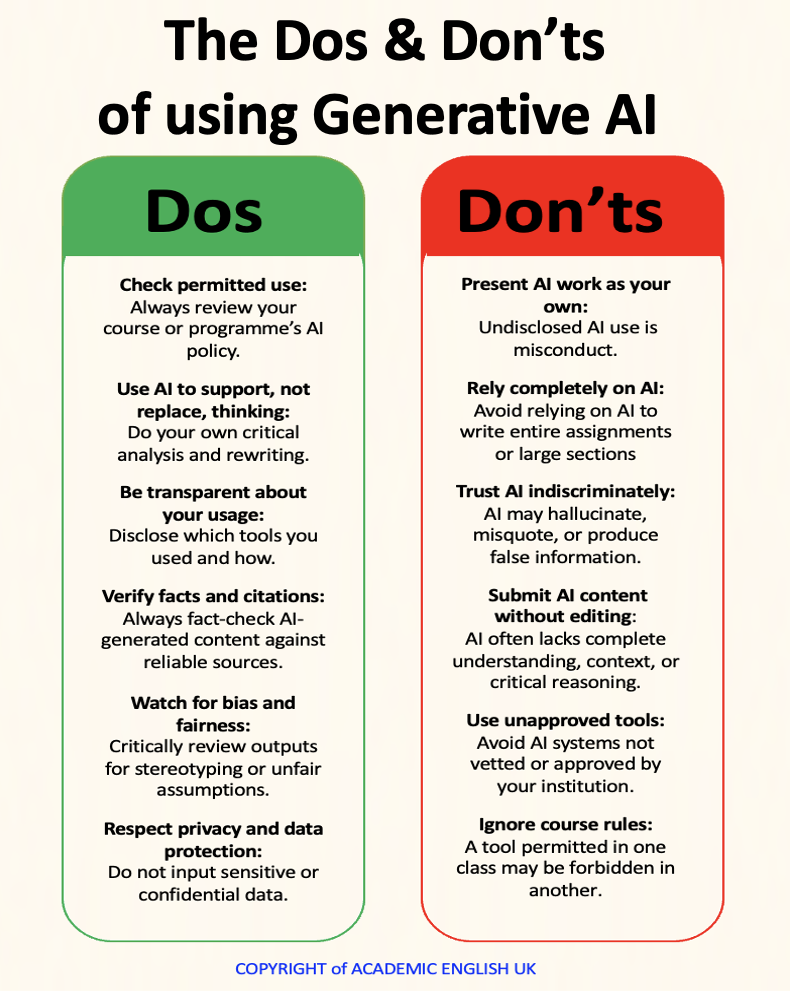

Generative AI provides opportunities such as personalised learning and content creation, but it also poses risks including misinformation, bias, and privacy concerns, which require responsible and critical use (2-5).

Reliability and Accuracy

AI often produces errors or misleading outputs because it cannot reliably distinguish truth from falsehood. In some cases, it fabricates information, known as hallucinations, including false references that appear credible but do not exist. Performance can also decline over time through a process called algorithmic drift, in which accuracy and reliability decrease because the system continually repeats and recycles the same information, reinforcing patterns and errors rather than adapting to new or changing data (4,5).

Bias and Fairness

AI systems are trained on vast datasets that may include stereotypes, cultural biases, or discriminatory patterns, which are often reproduced and even amplified in their outputs. There is a lack of transparency in how these systems generate responses, often described as the black box problem, which limits accountability and clear insight into decision-making processes. Generally, AI presents information in a confident and authoritative manner, which can encourage users to accept flawed or misleading content without applying sufficient critical evaluation (2,4).

Academic Integrity

By producing work with AI that does not reflect their own understanding, students risk committing plagiarism or academic dishonesty and graduating without genuine expertise or professional competence. In fact, such excessive reliance contradicts university pedagogical principles, as it weakens critical thinking, problem-solving, and independent learning skills (6,7).

Data Privacy and Compliance

AI systems often process sensitive personal or research data, raising risks of breaches, surveillance, or misuse. Involving third-party vendors, which are external organisations that store, manage, or analyse data on behalf of an institution, introduces additional risks of interception, unauthorised access, or exposure outside the institution’s direct control. Many AI systems are trained on copyrighted material without permission, which raises questions about intellectual property, unclear ownership of outputs, and potential legal disputes. Furthermore, accessibility and equality requirements must be addressed alongside full compliance with General Data Protection Regulation (GDPR) and Data Protection Act (DPA) obligations (3,5).

Ethical and Social Concerns

AI outputs may conflict with institutional values or misalign with ethical standards. In particular, overuse of AI, where automated systems are relied upon more than human judgment, can restrict academic freedom and discourage independent perspectives. This dependence may also reduce creativity and undermine professional expertise by replacing original thought and specialist knowledge with formulaic outputs. Staff–student relationships may also decline if AI is seen as replacing human roles, reducing trust, mentorship, and personal interaction. As a result, students may feel less supported or valued, while staff may experience a loss of professional identity and authority, ultimately weakening the sense of academic community (3,8).

Business and Reputational Risk

Failure to implement responsible AI innovation may compromise a university’s reputation and diminish its competitive position, while inadequate governance or misuse can erode institutional trust and credibility. Universities that adopt inconsistent or poorly defined AI policies risk exposure to privacy breaches, intellectual property disputes, and heightened public scrutiny (9).

4. Designing AI Resilient Assessments

Assessments most vulnerable to AI are formulaic, decontextualised, and focused on reproducing surface-level knowledge. These are precisely the tasks that AI is designed to perform. The aim is not to create assessments that AI cannot complete, since this threshold continually shifts, but to emphasise human judgement, lived experience, contextual understanding, ethical reasoning, and creativity (10,11,12).

AI resilient assessment design combines selective invigilation, live components, contextualised and process-based tasks, collaboration, ethical judgment, experiential learning, and guided AI use to promote integrity, critical thinking, and authentic engagement (6–8).

Invigilated and On-Campus Components

- Incorporate supervised or invigilated elements such as in-person exams, vivas, or practical demonstrations to confirm authorship and understanding.

- Use the On-Campus Sign-Off Method or short oral defences to verify student ownership of submitted work.

Contextual and Localised Assessment Tasks

- Design assignments that draw on module-specific readings, seminars, or case studies.

- Require reference to local data, examples, or institutional contexts that AI systems are less able to replicate accurately.

Interlinked and Developmental Assessment

- Create portfolios or linked assessments across a module or programme to assess coherence and sustained learning.

- Ask students to provide annotated drafts, research notes, or reflective commentaries that demonstrate process and progression.

Higher-Order Thinking and Critical Engagement

- Emphasise skills such as analysis, synthesis, and evaluation in both task design and marking rubrics.

- Use criteria that reward original argumentation, integration of theory, and engagement with course materials, reducing the grade potential of generic AI outputs.

Authentic and Scenario-Based Tasks

- Frame assignments around realistic case studies, simulations, or applied problems that include specific constraints (defined time limits, limited data sets, local examples, or scenario-based conditions).

- Ask students to adapt content for different audiences or purposes to demonstrate audience awareness and critical reflection.

- Include opportunities for personal insight or experience, connected to academic evidence and theoretical frameworks.

Incorporation of AI within Assessment

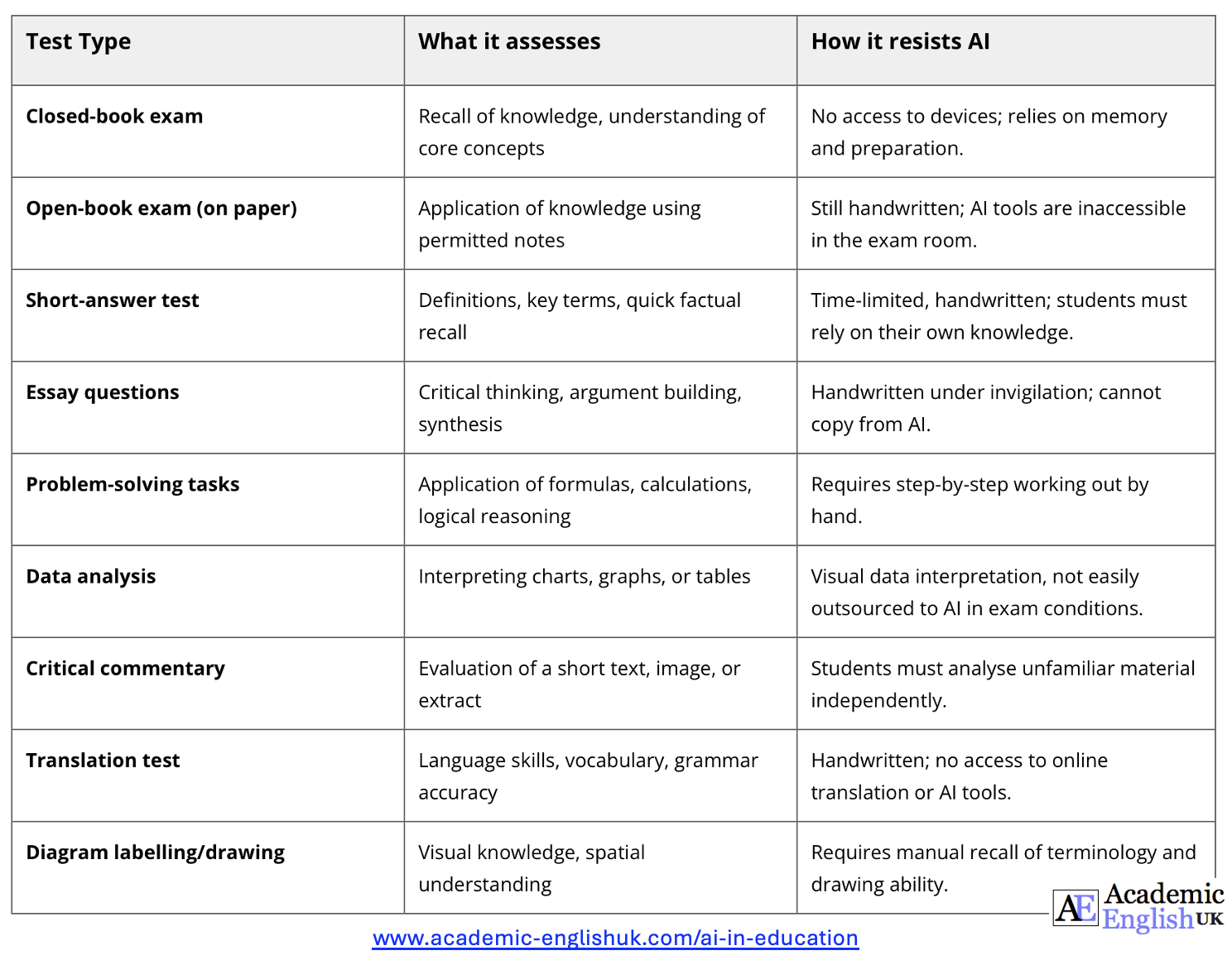

- Allow or require guided AI use under a traffic light system of “Amber” or “Green” conditions, where students critically evaluate AI outputs or demonstrate effective prompting.

- Tasks may include comparing AI and human writing, improving AI-generated drafts, or reflecting on the ethical implications of AI use.

Programme-Level and Institutional Approaches

- Embed AI resilience across entire programmes through a balanced mix of supervised, process-based, and long-form assessments.

- Review assessment structures regularly with educational developers to ensure alignment with emerging AI capabilities and institutional policies.

- Provide staff development through workshops and communities of practice on authentic assessment design and AI literacy.

- Engage students in discussions and learning activities that clarify acceptable AI use, fostering transparency and shared responsibility for academic integrity.

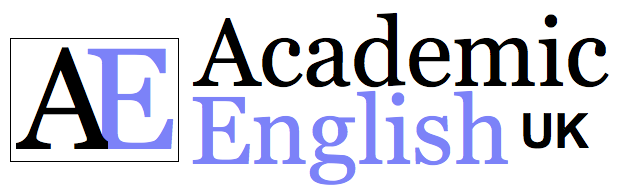

5. Pen & Paper Tests

Some universities are returning to handwritten, invigilated assessments to ensure authenticity and prevent student reliance on AI. For example:

The University of Liverpool emphasises that assessments must “never replace original thought, independent research, and the production of original work,” recommending traditional, invigilated, handwritten formats as part of a wider strategy to safeguard authenticity and uphold academic integrity (14).

Newcastle University recommends using assessment formats that “are less vulnerable to AI misuse, such as invigilated, in-person pen and paper exams, to preserve academic integrity in a world where generative AI is widely accessible” (15).

6. The Gen AI Traffic Light System

The Gen AI Traffic Light System sets clear policy on academic integrity by defining when AI use is prohibited (red), allowed in an assistive role (amber), or required as part of the assessment (green). It ensures students use AI responsibly, supporting learning without undermining originality, fairness, or academic standards (6,9,12).

8. AI in English Language Courses

So, where does this leave English language courses? Ultimately, the decision rests on the pedagogical principles underpinning the course. What are the intended learning outcomes? For instance, if the goal is to raise a student’s IELTS score from 5.5 to 6.5, the emphasis may need to fall, to a certain extent, on the mechanical aspects of language development. This raises a series of pressing questions. Given that EAP is heavily skills-based, to what extent should students be permitted to use AI, and how can tutors regulate this? The challenges are significant, and policies must remain both clear and flexible in response to an ever-evolving AI environment.

Specific questions highlight the complexity: Is it acceptable to use AI for translating academic texts, generating outlines, or employing paraphrasing and referencing tools? To what degree should students rely on assistive AI such as Grammarly or MS Word’s built-in checkers? Additionally, to what extent may they ‘polish’ their work before it compromises authorship? Even more challenging, how can tutors reliably determine what proportion of the work is genuinely the student’s own, especially when AI can now be trained to reproduce the linguistic errors of a B2-level learner?

Possible solutions include:

- Reintroduction of Traditional Methods: Reinstate pen-and-paper classroom activities such as defining terminology, paraphrasing, summarising, note-taking, and vocabulary development. These practices not only reinforce core linguistic skills but also enable the creation of a Tutor Portfolio, wherein handwritten work can serve as verifiable evidence in cases of suspected AI-related academic misconduct.

- Defining Acceptable AI Use: Establish clear parameters for the permissible use of generative AI within language learning contexts. For example, AI tools may be utilised for surface-level correction, such as grammar, vocabulary, and punctuation, but must not be employed for generating original content or completing substantive academic tasks.

- Assessing Student Comprehension: Integrate assessment methods that directly evaluate a student’s understanding of subject matter through oral formats, including vivas, oral defences, and structured in-person verification procedures such as the On-Campus Sign-Off Method, which typically involves students drafting, editing, or finalising their assignments under supervised conditions.

- Institutional AI Policy Framework: Develop and implement a comprehensive AI policy at institutional level, incorporating an “AI Traffic Light” system that clearly delineates acceptable and prohibited uses. All students should be required to sign an AI usage declaration at the beginning of their course to ensure informed compliance.

- Data Protection and Privacy Compliance: Promote awareness of data security and ensure full adherence to GDPR and UK data protection regulations. Student work, instructional materials, and personal data must not be uploaded to AI platforms without explicit consent and appropriate safeguards.

- AI Literacy and Risk Awareness: Embed AI training modules within the curriculum to educate students on the limitations, ethical considerations, and potential risks associated with over-reliance on AI technologies. These sessions should provide practical strategies for mitigating misuse and fostering responsible engagement.

9. Conclusion

Generative AI is reshaping higher education by introducing new possibilities for personalising learning, streamlining teaching, and supporting accessibility, while simultaneously creating fresh challenges around accuracy, ethics, and academic integrity. The analysis of UK Government guidance and policies from leading universities demonstrates that responsible integration requires more than simply regulating tool use; it demands a shift in assessment design, with greater emphasis on human judgement, lived experience, and critical engagement. Frameworks such as the AI Traffic Light System provide clarity on when and how AI may be used, while the adoption of secure tools like Microsoft Copilot highlights how institutions can support innovation without compromising data privacy or fairness. Moving forward, universities must strike a balance between embracing AI’s opportunities and protecting the values that underpin academic study.

10. Reference List

- 1. Department for Education. Generative artificial intelligence (AI) in education [Internet]. GOV.UK; 2023 [cited 2025 Sep 26]. Available from: https://www.gov.uk/government/publications/generative-artificial-intelligence-in-education/generative-artificial-intelligence-ai-in-education

- 2. University of Glasgow. Artificial intelligence in learning: important limitations and problems [Internet]. 2024 [cited 2025 Sep 26]. Available from: https://www.gla.ac.uk/myglasgow/sld/ai/students/#ai%3Aimportantlimitations%2Cimportantproblems

- 3. UK Parliament. Artificial intelligence: education and impacts on children and young people [Internet]. Parliamentary Office of Science and Technology (POST); 2023 [cited 2025 Sep 26]. Available from: https://post.parliament.uk/artificial-intelligence-education-and-impacts-on-children-and-young-people/

- 4. University of Reading. Generative AI and university study: limitations [Internet]. LibGuides; 2024 [cited 2025 Sep 26]. Available from: https://libguides.reading.ac.uk/generative-AI-and-university-study/limitations

- 5. University of Edinburgh. Introducing AI in our learning technology [Internet]. Information Services; 2024 [cited 2025 Sep 26]. Available from: https://information-services.ed.ac.uk/learning-technology/more-about-learning-technology/introducing-ai-in-our-learning-technology-1

- 6. King’s College London. Authentic assessment: approaches to assessment in the age of AI [Internet]. 2024 [cited 2025 Sep 26]. Available from: https://www.kcl.ac.uk/about/strategy/learning-and-teaching/ai-guidance/approaches-to-assessment/authentic-assessment

- 7. University of Sussex. Developing writing assignments [Internet]. Staff guidance; 2024 [cited 2025 Sep 26]. Available from: https://staff.sussex.ac.uk/teaching/enhancement/support/assessment-design/developing-writing-assignments

- 8. Wheeler S. Designing AI resilient assessment [Internet]. University of Manchester; 2024 [cited 2025 Sep 26]. Available from: https://personalpages.manchester.ac.uk/staff/stephen.wheeler/blog/0024_designing_ai_relilient_assessment.htm

- 9. University of Leeds. GenAI quick checklist [Internet]. Generative AI at Leeds; 2024 [cited 2025 Sep 26]. Available from: https://generative-ai.leeds.ac.uk/ai-and-assessments/gen-ai-quick-checklist/

- 10. University of Edinburgh. Using generative AI: guidance for students [Internet]. Information Services; 2024 [cited 2025 Sep 26]. Available from: https://information-services.ed.ac.uk/computing/communication-and-collaboration/elm/generative-ai-guidance-for-students/using-generative

- 11. Biesta G. The beautiful risk of education. Boulder: Paradigm Publishers; 2013.

- 12. Freire P. Pedagogy of the oppressed. New York: Herder and Herder; 1970.

- 13. University of Leicester. Academic quality and standards [Internet]. [cited 2025 Sep 26]. Available from: https://le.ac.uk/policies/quality

- 14. University of Liverpool. Guidance on the Use of Generative AI (Teach, Learn & Assess) [Internet]. 2024 [cited 2025 Sep 28]. Available from: https://www.liverpool.ac.uk/media/livacuk/centre-for-innovation-in-education/digital-education/generative-ai-teach-learn-assess/guidance-on-the-use-of-generative-ai.pdf

- 15. Newcastle University. AI in Assessment [Internet]. Newcastle: Newcastle University; 2023 [cited 2025 Sep 28]. Available from: https://www.ncl.ac.uk/learning-and-teaching/effective-practice/ai/ai-in-assessment/

- 16. Moorhouse BL. Generative AI tools and assessment: Guidelines of the HE sector. Computers and Education: Artificial Intelligence [Internet]. 2023;5:100179. Available from: https://www.sciencedirect.com/science/article/pii/S2666557323000290
